SpiTranNet-LIF: A Hybrid Spiking–Transformer Framework for Efficient Motor Imagery Decoding

Authors: M.K. Titkanlou, A. Hashemi, R. Mouček

Abstract & Overview

Decoding motor imagery from electroencephalography (EEG) signals is a fundamental challenge in brain–computer interface (BCI) research. EEG has complex temporal structure, and conventional deep learning models tend to be energy-inefficient and to generalize poorly. This work introduces SpiTranNet-LIF, a hybrid neural framework that integrates spiking neural dynamics with transformer-based attention for efficient, biologically inspired motor imagery classification.

By embedding adaptive Leaky Integrate-and-Fire (LIF) neurons into a spiking multi-head self-attention module, the proposed architecture captures both local temporal patterns and long-range contextual dependencies while keeping computation temporally sparse. The framework targets real-time inference and deployment on resource-constrained and neuromorphic BCI systems.
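The adaptive LIF neuron can be illustrated with a minimal discrete-time simulation. This is a sketch under stated assumptions, not the authors' neuron model: the leak factor `beta`, base threshold `theta0`, the adaptation decay `rho` and increment `alpha`, and the soft-reset rule are all hypothetical choices. Each spike raises the neuron's own threshold, which then decays back toward `theta0`, so sustained input produces sparser firing than a fixed-threshold LIF neuron.

```python
import numpy as np

def adaptive_lif(inputs, beta=0.9, theta0=1.0, rho=0.95, alpha=0.2):
    """Simulate N adaptive LIF neurons over T steps.

    Hypothetical parameters (not from the source): beta = membrane leak,
    theta0 = base threshold, rho = adaptation decay, alpha = threshold
    increment per spike.
    inputs: (T, N) input currents. Returns (spikes, thresholds), both (T, N).
    """
    T, N = inputs.shape
    v = np.zeros(N)                   # membrane potential
    a = np.zeros(N)                   # adaptation term added to the base threshold
    spikes = np.zeros((T, N))
    thresholds = np.zeros((T, N))
    for t in range(T):
        v = beta * v + inputs[t]      # leaky integration of the input current
        theta = theta0 + a            # current (adaptive) firing threshold
        s = (v >= theta).astype(float)
        v = v - s * theta             # soft reset: subtract the threshold on a spike
        a = rho * a + alpha * s       # spikes raise the threshold; it decays otherwise
        spikes[t] = s
        thresholds[t] = theta
    return spikes, thresholds
```

Setting `alpha=0.0` recovers a plain LIF neuron with a fixed threshold, which makes it easy to compare spike counts for the same constant input.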

Keywords & Core Concepts

Motor Imagery, EEG, Brain–Computer Interfaces, Spiking Neural Networks, Transformers, LIF Neurons, Neuromorphic Computing, Temporal Attention

Dataset & Signal Processing

Dataset: The model is evaluated on the BCI Competition IV-2a dataset, which contains 22-channel EEG recordings from nine subjects, sampled at 250 Hz under a cue-based protocol with four motor imagery classes (left hand, right hand, both feet, tongue). Only the left-hand and right-hand trials are used, framing the task as binary classification.

Preprocessing: Signals undergo band-pass filtering in the 8–30 Hz range, exponential moving standardization, and common average referencing. Trials are segmented into fixed-length temporal windows and encoded into spike trains suitable for spiking neural computation.
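The preprocessing chain can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' pipeline: the FFT-mask band-pass stands in for whatever filter was actually used, the recursive standardization and its `factor_new`, `init_block`, and `eps` values are assumptions, and the window length, stride, and delta (threshold-crossing) spike encoder with threshold `spike_thr` are hypothetical, since the source does not specify the window size or the spike-encoding scheme.

```python
import numpy as np

FS = 250  # sampling rate of BCI IV-2a, in Hz

def bandpass_fft(x, fs, lo=8.0, hi=30.0):
    """Keep only the lo..hi Hz band along the last (time) axis by masking FFT bins."""
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    spec = np.fft.rfft(x, axis=-1)
    spec[..., (freqs < lo) | (freqs > hi)] = 0
    return np.fft.irfft(spec, n=x.shape[-1], axis=-1)

def exponential_moving_standardize(x, factor_new=1e-3, init_block=250, eps=1e-4):
    """Standardize each channel (row of x) with an exponentially weighted running
    mean and variance, initialized from the first `init_block` samples (assumed values)."""
    mean = x[:, :init_block].mean(axis=1)
    var = x[:, :init_block].var(axis=1)
    out = np.empty_like(x)
    for t in range(x.shape[1]):
        mean = (1 - factor_new) * mean + factor_new * x[:, t]
        var = (1 - factor_new) * var + factor_new * (x[:, t] - mean) ** 2
        out[:, t] = (x[:, t] - mean) / np.maximum(np.sqrt(var), eps)
    return out

def delta_encode(w, thr):
    """Hypothetical encoder: an 'up' spike when a channel rises by more than thr between
    samples, a 'down' spike when it falls by more than thr. Returns (2 * channels, samples)."""
    d = np.diff(w, axis=-1, prepend=w[:, :1])
    return np.concatenate([d > thr, d < -thr], axis=0).astype(np.uint8)

def preprocess(trial, fs=FS, win_sec=2.0, step_sec=0.5, spike_thr=0.5):
    """trial: (channels, samples) raw EEG -> (windows, 2 * channels, window_samples) spikes."""
    x = bandpass_fft(trial, fs)                 # 8-30 Hz band-pass
    x = exponential_moving_standardize(x)       # running per-channel standardization
    x = x - x.mean(axis=0, keepdims=True)       # common average reference
    win, step = int(win_sec * fs), int(step_sec * fs)
    starts = range(0, x.shape[1] - win + 1, step)
    return np.stack([delta_encode(x[:, s:s + win], spike_thr) for s in starts])
```

For a 4-second, 22-channel trial (1000 samples), 2-second windows with a 0.5-second stride yield five windows, each encoded as 44 binary spike channels of 500 time steps.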

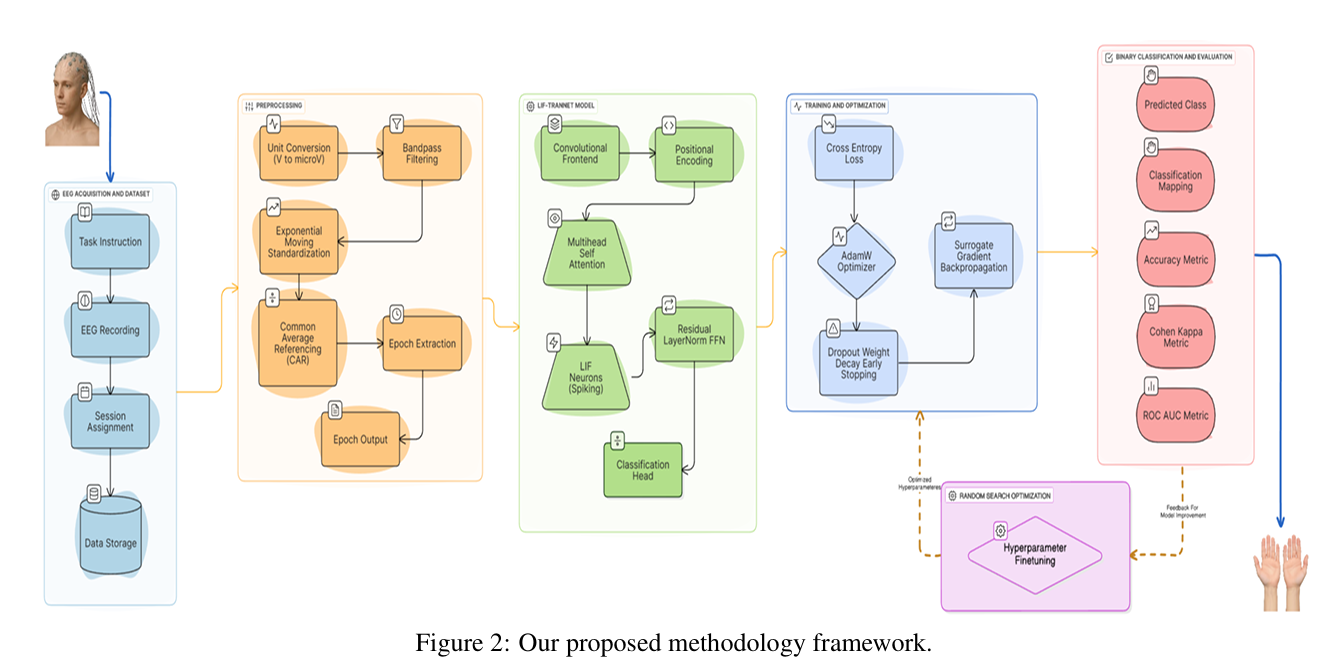

Proposed Architecture

SpiTranNet-LIF integrates convolutional feature extraction with a spiking transformer backbone. Temporal features are processed through spiking multi-head self-attention layers, where adaptive LIF neurons regulate membrane dynamics and firing thresholds.
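The source does not detail how spiking multi-head self-attention is computed. A minimal sketch, assuming a Spikformer-style formulation, is shown below: queries, keys, and values are produced by LIF neurons and are therefore binary, so the softmax is dropped and Q K^T is used directly as a non-negative similarity, scaled by a constant. The helper names, the fixed-threshold LIF with hard reset, the `scale` value, and the omission of normalization layers are all assumptions for illustration.

```python
import numpy as np

def lif_over_time(x, beta=0.9, theta=1.0):
    """Run LIF neurons along axis 0 (time). x: (T, ...) currents -> binary spikes."""
    v = np.zeros(x.shape[1:])
    out = np.zeros_like(x)
    for t in range(x.shape[0]):
        v = beta * v + x[t]
        s = (v >= theta).astype(x.dtype)
        v = v * (1.0 - s)               # hard reset to zero after a spike
        out[t] = s
    return out

def spiking_self_attention(x, w_q, w_k, w_v, n_heads, scale=0.125):
    """Hypothetical spiking MHSA. x: (T, N, D) spikes over T steps and N tokens;
    w_q, w_k, w_v: (D, D) weights. Returns (T, N, D) spikes."""
    T, N, D = x.shape
    dh = D // n_heads
    q = lif_over_time(x @ w_q)          # binary queries
    k = lif_over_time(x @ w_k)          # binary keys
    v = lif_over_time(x @ w_v)          # binary values

    def split_heads(m):
        # (T, N, D) -> (T, heads, N, dh)
        return m.reshape(T, N, n_heads, dh).transpose(0, 2, 1, 3)

    q, k, v = split_heads(q), split_heads(k), split_heads(v)
    # No softmax: with non-negative spike inputs, Q K^T is already a non-negative score
    attn = q @ k.transpose(0, 1, 3, 2) * scale     # (T, heads, N, N)
    out = (attn @ v).transpose(0, 2, 1, 3).reshape(T, N, D)
    return lif_over_time(out)           # re-spike the attention output
```

Because Q, K, and V are binary, the matrix products reduce to additions of selected entries, which is the usual argument for the efficiency of spike-driven attention.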

The network is trained end-to-end using surrogate gradient learning, which replaces the derivative of the spike function (zero almost everywhere) with a smooth approximation during backpropagation. Because attention operates on sparse binary spike events, this hybrid design models temporal structure efficiently while reducing computational redundancy relative to conventional transformer architectures.
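Surrogate gradient learning can be seen in a toy setting: a single neuron whose weight is fitted so that half of its inputs elicit a spike. The forward pass uses the true Heaviside step, whose derivative is zero almost everywhere, so plain backpropagation would never update the weight; the backward pass substitutes a fast-sigmoid derivative. The fast-sigmoid surrogate, its `slope`, the rate-matching loss, and the learning rate are assumptions for illustration; the source does not name the surrogate function used.

```python
import numpy as np

def heaviside(u):
    """Forward spike function: 1 where the potential reaches threshold, else 0."""
    return (u >= 0).astype(float)

def fast_sigmoid_grad(u, slope=10.0):
    """Assumed surrogate for dS/du: derivative of a fast sigmoid, 1 / (1 + slope*|u|)^2."""
    return 1.0 / (1.0 + slope * np.abs(u)) ** 2

def train_rate(x, target_rate, w=0.0, theta=1.0, lr=5.0, steps=300):
    """Fit one weight so the fraction of inputs x that trigger a spike approaches target_rate."""
    for _ in range(steps):
        u = w * x - theta               # membrane potential relative to threshold
        rate = heaviside(u).mean()      # non-differentiable forward pass
        # dL/dw for L = (rate - target)^2, with dS/du replaced by the surrogate
        grad = 2 * (rate - target_rate) * np.mean(fast_sigmoid_grad(u) * x)
        w -= lr * grad
    return w, heaviside(w * x - theta).mean()
```

For inputs drawn uniformly from [0, 2], a firing rate of 0.5 corresponds to w close to 1, and the surrogate gradient drives the weight there from w = 0 even though the true gradient is zero at every step.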

Evaluation & Results

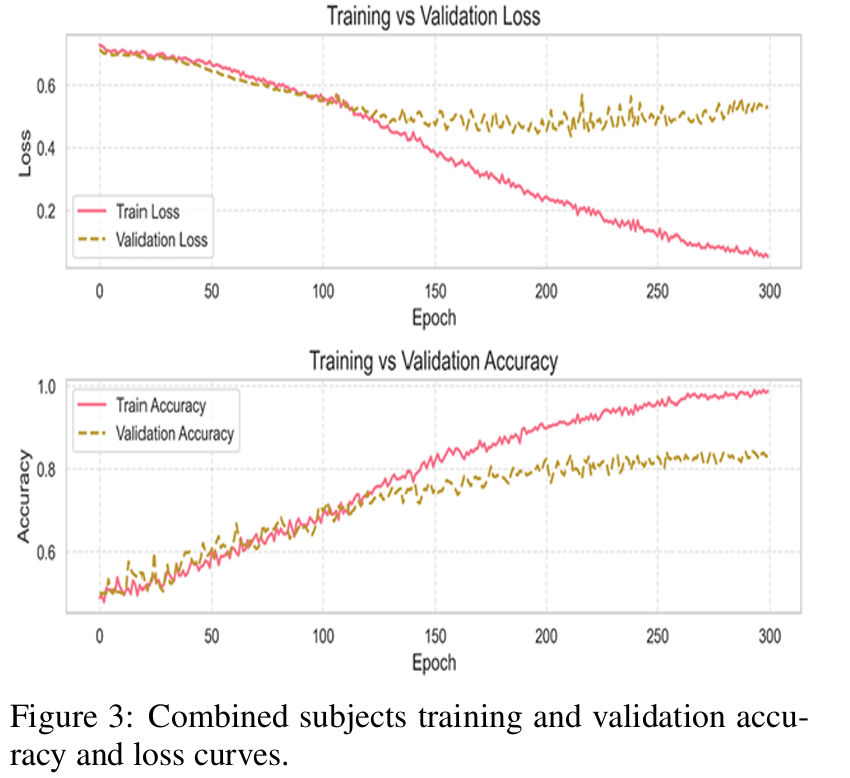

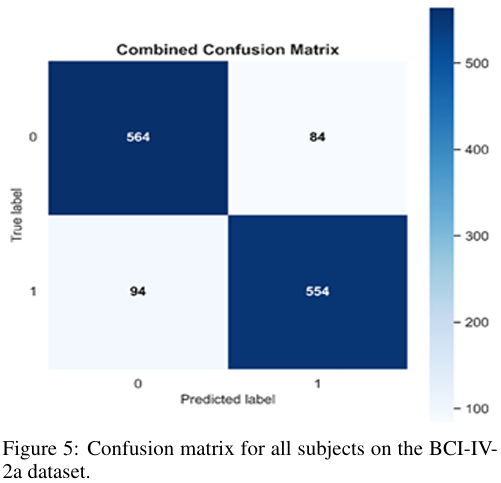

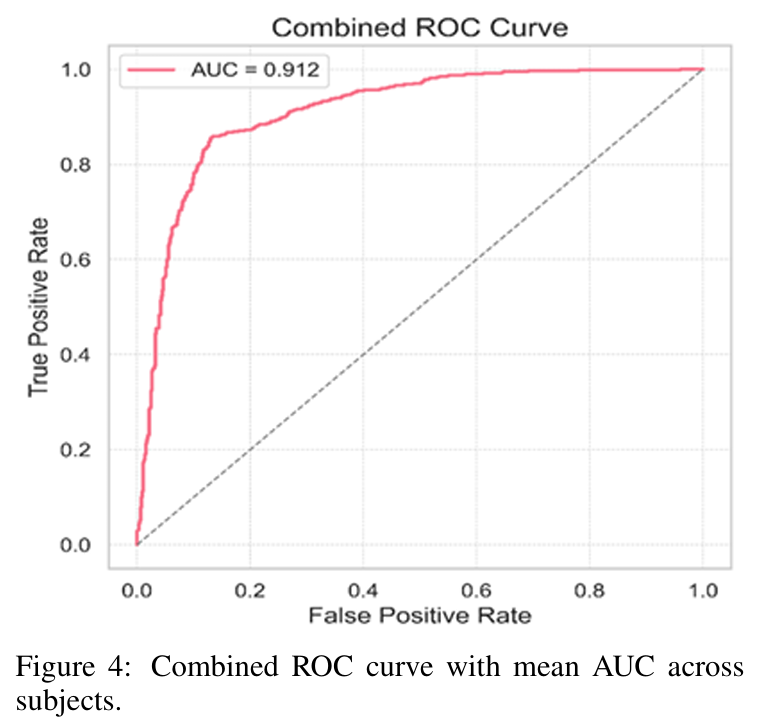

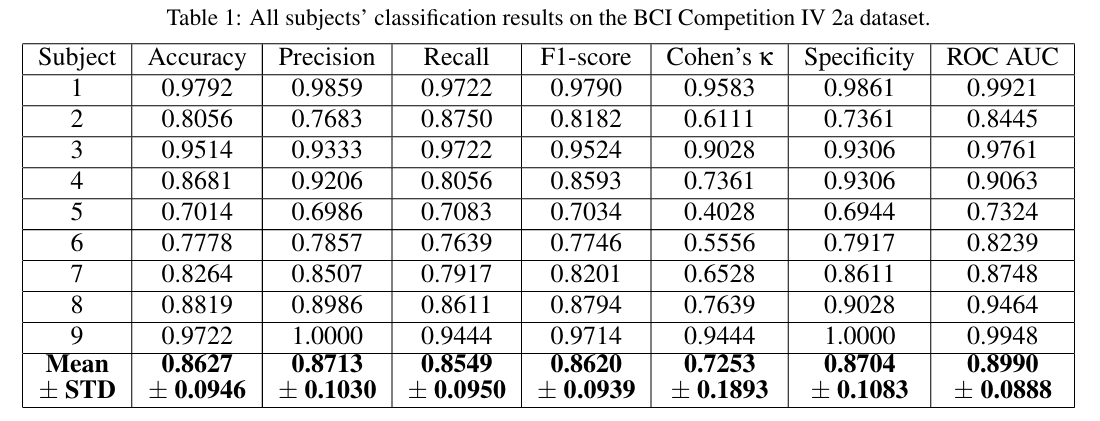

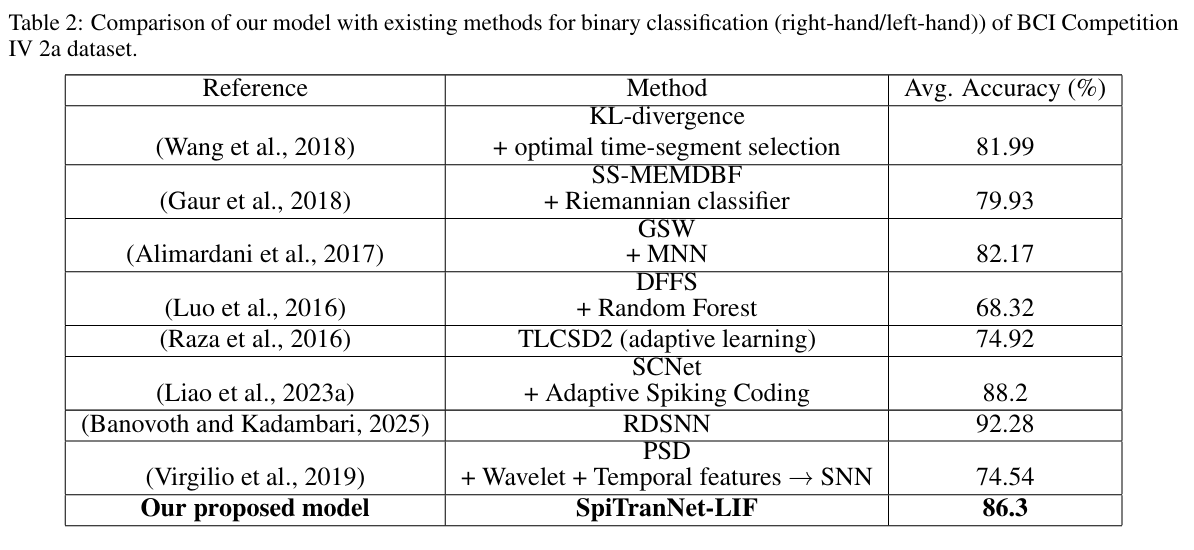

Experimental results demonstrate an average classification accuracy of 86.3%, with a Cohen’s κ score of 0.73 and a mean ROC-AUC of 0.91. Subject-wise evaluations show stable performance despite inter-subject variability.
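The reported accuracy and κ are mutually consistent. Cohen's κ is (p_o - p_e) / (1 - p_e), where p_o is observed agreement (accuracy) and p_e is chance agreement. With balanced true classes, p_e = 1/K for K classes regardless of the predictions, so for the binary task κ = (0.863 - 0.5) / 0.5 ≈ 0.726, which rounds to the reported 0.73. Because this relation is linear in accuracy, it also holds for subject-averaged values. The helper names below are illustrative.

```python
def cohen_kappa(confusion):
    """Cohen's kappa from a square confusion matrix (rows = true, cols = predicted)."""
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    p_o = sum(confusion[i][i] for i in range(k)) / n
    # chance agreement: sum over classes of (true marginal * predicted marginal)
    p_e = sum(sum(confusion[i]) * sum(confusion[r][i] for r in range(k))
              for i in range(k)) / n ** 2
    return (p_o - p_e) / (1 - p_e)

def kappa_from_accuracy(acc, n_classes=2):
    """Kappa implied by an accuracy when true classes are balanced (p_e = 1 / n_classes)."""
    chance = 1.0 / n_classes
    return (acc - chance) / (1 - chance)

print(round(kappa_from_accuracy(0.863), 2))   # 0.73
```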

The model has a compact footprint (~600K parameters), low GPU memory consumption, and sub-second inference latency, supporting its suitability for real-time BCI deployment. These findings highlight the effectiveness of hybrid spiking–transformer architectures for efficient EEG decoding.