Breast Cancer Classification using ResNeXt50_32x4d with Hybrid Loss

Authors: A. Hashemi, K. Azizi, M.K. Titkanlou, R. Mouček

Abstract & Overview

Breast cancer remains a leading cause of cancer-related deaths in women, requiring early and accurate diagnosis. Histopathological analysis is the gold standard but is time-consuming and subjective. This study proposes a deep learning framework based on ResNeXt50_32x4d for binary classification of benign and malignant breast tumors using histopathological images.

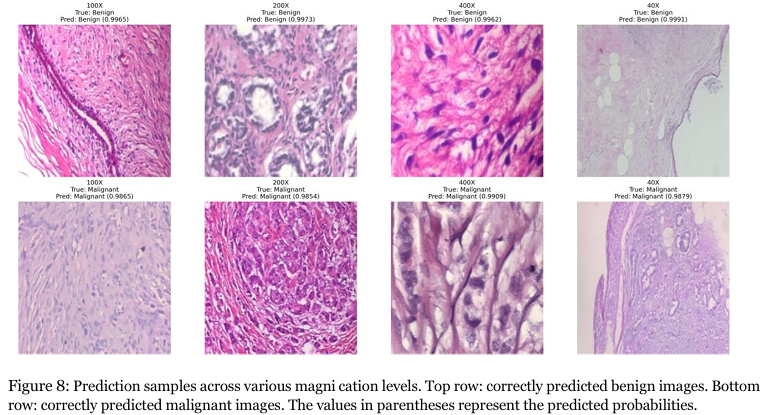

Using the BreaKHis dataset (7,909 images at 40×, 100×, 200×, and 400× magnifications), the model is fine-tuned with a patient-wise split to prevent data leakage. A diverse augmentation pipeline and a hybrid loss function (weighted BCE + hard triplet loss) improve generalization and embedding separability. The model achieves 99.37% test accuracy and an AUC-ROC of 0.9976, with accuracy of at least 98.33% at every magnification level.

Keywords & Key Concepts

Breast Cancer Classification, Histopathological Images, ResNeXt50_32x4d, Hybrid Loss Function, Data Augmentation, Multi-Resolution Analysis, Deep Learning, Transfer Learning

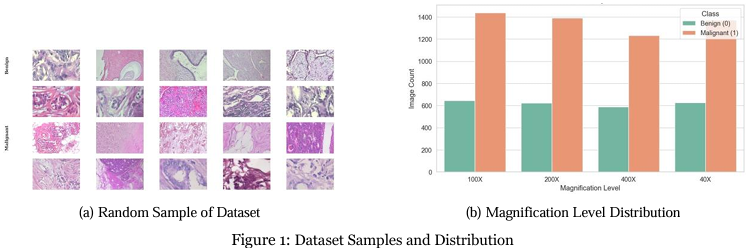

Dataset & Preprocessing

Dataset: BreaKHis contains 7,909 images from 82 patients in two classes. Benign (2,480 images): adenosis, fibroadenoma, phyllodes tumor, tubular adenoma. Malignant (5,429 images): ductal carcinoma, lobular carcinoma, mucinous carcinoma, papillary carcinoma. Images are 700 × 460 px, RGB, at 40×, 100×, 200×, and 400× magnifications.

Preprocessing: Patient-wise split (70% training, 20% validation, 10% testing), so that no patient's images appear in more than one subset. Classes are balanced via oversampling/undersampling. Augmentation includes resizing to 256×256 followed by a 224×224 crop, flips, affine transforms, color jitter, Gaussian blur, random erasing, MixUp, and CutMix.
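
The patient-wise split can be illustrated with a minimal sketch. It assumes each image is available as a `(patient_id, image_path)` pair; the function name `patient_wise_split`, the record layout, the rounding rule, and the seed are hypothetical choices for illustration, not the authors' code. Whether the 70/20/10 ratios in the study refer to patients or to images is not stated, so the sketch applies them to patients.

```python
import random
from collections import defaultdict


def patient_wise_split(records, ratios=(0.7, 0.2, 0.1), seed=0):
    """Split (patient_id, image_path) records so each patient lands in one subset.

    The ratios are applied to the number of patients, not the number of images
    (an assumption of this sketch).
    """
    # Group image paths by patient so a patient can never straddle two subsets.
    by_patient = defaultdict(list)
    for patient_id, path in records:
        by_patient[patient_id].append(path)

    # Shuffle patient IDs deterministically.
    patients = sorted(by_patient)
    random.Random(seed).shuffle(patients)

    n_train = round(ratios[0] * len(patients))
    n_val = round(ratios[1] * len(patients))
    groups = {
        "train": patients[:n_train],
        "val": patients[n_train:n_train + n_val],
        "test": patients[n_train + n_val:],  # remainder goes to the test set
    }
    # Expand each group of patients back into its image records.
    return {
        name: [(pid, path) for pid in ids for path in by_patient[pid]]
        for name, ids in groups.items()
    }
```

Because patients contribute different numbers of images, the image-level proportions only approximate 70/20/10 under this scheme; the guarantee is that the patient sets are disjoint.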

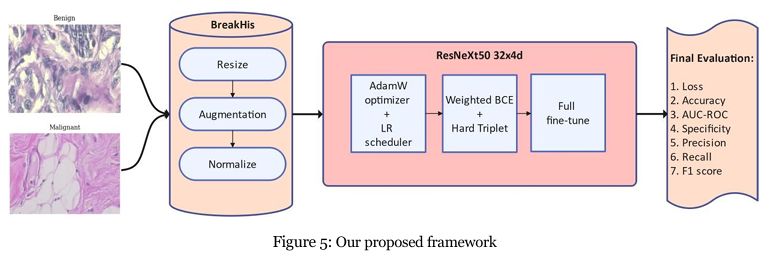

Proposed Method & Architecture

CNN & Transfer Learning: A modified ResNeXt50_32x4d architecture with a custom classifier head for binary output, fine-tuned on histopathology images.

Model Architecture: ResNeXt50_32x4d backbone. A ResNeXt+CBAM variant adds Convolutional Block Attention Modules after layer3 and layer4. Hybrid loss: weighted BCE + hard triplet loss. Optimizer: AdamW with a ReduceLROnPlateau scheduler, early stopping, and mixed-precision training.
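
To make the hybrid loss concrete, the following framework-free sketch combines a weighted BCE term on the classifier logits with a triplet term on the embeddings. Several details are assumptions, since the summary does not specify them: "hard" is read here as batch-hard mining (farthest positive, nearest negative per anchor), distances are Euclidean, and `pos_weight`, `margin`, and `triplet_weight` are hypothetical parameters with placeholder defaults. In practice this would be written with tensor operations in the training framework.

```python
import math


def weighted_bce(logits, labels, pos_weight=1.0):
    """Mean binary cross-entropy on raw logits; positive terms scaled by pos_weight."""
    total = 0.0
    for z, y in zip(logits, labels):
        # Numerically stable log(sigmoid(z)) and log(1 - sigmoid(z)).
        log_p = -math.log1p(math.exp(-z)) if z >= 0 else z - math.log1p(math.exp(z))
        log_not_p = log_p - z
        total -= pos_weight * y * log_p + (1 - y) * log_not_p
    return total / len(labels)


def batch_hard_triplet(embeddings, labels, margin=0.3):
    """Batch-hard triplet loss: per anchor, farthest positive vs. nearest negative."""
    losses = []
    for i, (anchor, y_anchor) in enumerate(zip(embeddings, labels)):
        pos = [math.dist(anchor, e)
               for j, (e, y) in enumerate(zip(embeddings, labels))
               if y == y_anchor and j != i]
        neg = [math.dist(anchor, e)
               for e, y in zip(embeddings, labels) if y != y_anchor]
        if pos and neg:  # skip anchors without a valid triplet in this batch
            losses.append(max(0.0, max(pos) - min(neg) + margin))
    return sum(losses) / len(losses) if losses else 0.0


def hybrid_loss(logits, embeddings, labels,
                pos_weight=1.0, margin=0.3, triplet_weight=1.0):
    """Weighted BCE plus a scaled triplet term (the weighting is an assumption)."""
    bce = weighted_bce(logits, labels, pos_weight)
    triplet = batch_hard_triplet(embeddings, labels, margin)
    return bce + triplet_weight * triplet
```

The BCE term drives the classification decision, while the triplet term pushes benign and malignant embeddings apart by at least `margin`, which is the embedding separability the method aims for.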

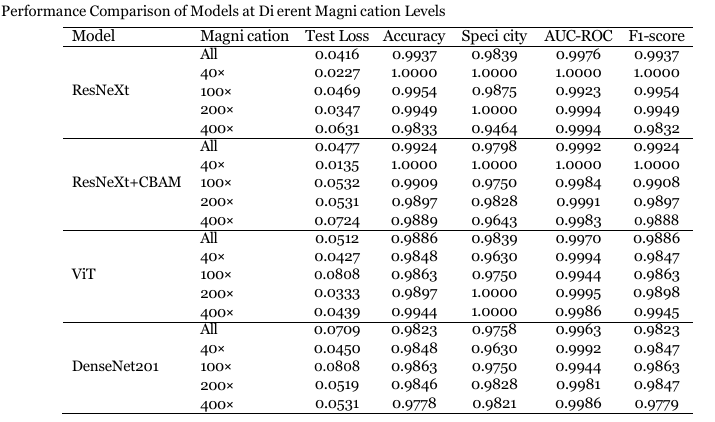

Evaluation & Results

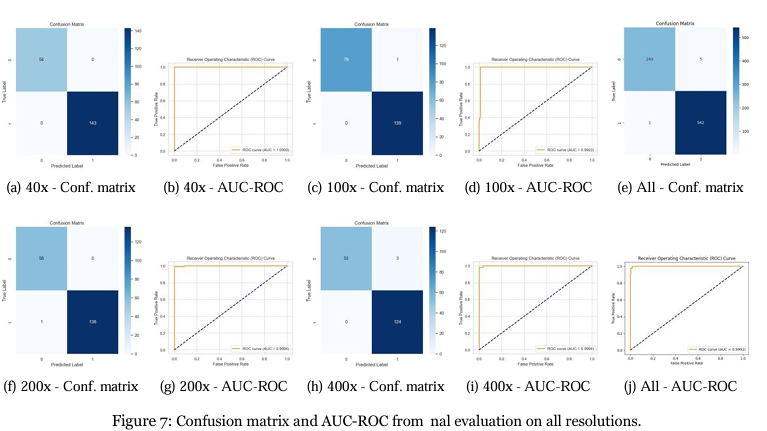

Overall Accuracy: 99.37% (ResNeXt baseline). Magnification-level accuracy: 40×: 100%, 100×: 99.54%, 200×: 99.49%, 400×: 98.33%.
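
Magnification-level accuracy of this kind can be computed by grouping test predictions by magnification. The sketch below assumes predictions are available as `(magnification, true_label, predicted_label)` tuples; the function name and record layout are hypothetical, and the example is not the authors' evaluation code.

```python
from collections import defaultdict


def accuracy_by_magnification(rows):
    """Return (per-magnification accuracy dict, overall accuracy).

    rows: iterable of (magnification, true_label, predicted_label).
    """
    hits = defaultdict(int)
    counts = defaultdict(int)
    for magnification, y_true, y_pred in rows:
        counts[magnification] += 1
        hits[magnification] += int(y_true == y_pred)

    per_mag = {m: hits[m] / counts[m] for m in sorted(counts)}
    # Overall accuracy is image-weighted, so it is not the plain mean of per_mag.
    overall = sum(hits.values()) / sum(counts.values())
    return per_mag, overall
```

The overall figure weights each magnification by its number of test images, so it need not equal the unweighted average of the four per-magnification values.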

Other Models: ResNeXt+CBAM: 99.24% overall, improved AUC-ROC at some levels. ViT-B/16: 98.86% accuracy, excels at high magnifications. DenseNet201: 98.23%, lower at higher resolutions.

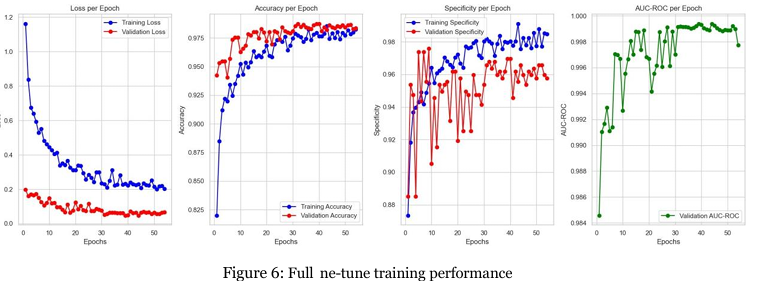

Training curves show fast convergence (~54 epochs) with minimal overfitting. Confusion matrices and AUC-ROC curves confirm robustness. The proposed framework achieves state-of-the-art performance in binary breast cancer classification.

The hybrid loss combined with advanced augmentation yields high accuracy, good generalization, and robust embedding separation. Multi-resolution evaluation supports applicability across clinical histopathology images. Future work includes transformer-CNN hybrids, attention modules, and multi-class classification.